Written by

Jonathan Taylor

Published on

Nov 13, 2024

How much does an AI eat?

The future of artificial intelligence will not rely on an algorithmic breakthrough but on a breakthrough in energy. Simple interfaces hide a hard fact: the energy required to power these tools is immense.

I heard Sam Altman speaking about this topic. Here's what he said:

“We still don’t appreciate the energy needs of this technology... There’s no way to get there without a breakthrough. We need [nuclear] fusion or we need like radically cheaper solar plus storage or something at massive scale.”

This got me thinking: how much energy does AI really need?

Humans struggle to make sense of statistics. For instance, I don't have a good feel for how much energy a 1,200-watt server (the kind of high-end machine used to run an AI) consumes in an hour. Is it a lot? Is it a little?

What if AI required calories instead of electricity? How much food would an AI require to eat?

In this post, we're going to break down the energy expenditure of AI and compare it to our other favourite thinking machine, the human brain.

The Appetite of Artificial Intelligence

If you invited ChatGPT to sit down and eat breakfast with you, how much food would you need to feed it? The short answer is a lot. By my estimate, you'd need to feed your AI about 860,421 calories every hour to match its energy use. That's roughly 20.7 million calories for one day of operation.

If you split just one hour's worth into three meals, each meal would be about 286,000 calories. For comparison, the average person needs about 2,000-2,500 calories per day. At the higher end, divided across 3 meals, that's about 800 calories each (a sizable meal).

To break it down further, here's what each would eat:

| Food | Human | AI |
|---|---|---|
| Toast | 2 slices | 3,813 slices |
| Bacon | 4 slices | 6,650 slices |
| Yoghurt | 1 cup | 1,932 cups |
| Fruit | 2 cups | 3,714 cups |

At about 1,400 kg, the AI's meal would easily break the strongest dining room table. And you'd need to serve it every hour, around the clock.

A common trope in science fiction is that androids don't need food: they just run all the time and get plugged into a wall from time to time.

In the real world, the energy demands of AI are still not entirely clear. In the worst-case scenario, Google's AI alone could use as much energy as a small country like Ireland (source: The Verge). And that's only one AI. How many other big tech companies are developing their own?

We do have a real-world proxy for a high-demand service: Google Search. Its extraordinary energy demands have been covered by mainstream outlets such as Forbes and The New York Times. According to Statista, Google as a whole used 22.29 terawatt-hours (TWh) in 2022. That is enough energy to power New York City for over two years.

That's a lot of energy.
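Because watts measure a rate and watt-hours measure an amount, one way to make the Statista number concrete is to spread it evenly over the year and ask what continuous power it implies. The Python sketch below does that, assuming a flat draw across all 8,760 hours of 2022 (real demand fluctuates) and reusing the 20-watt brain figure that appears later in this post purely as a yardstick.

```python
# Convert an annual energy total into the average power it implies.
HOURS_PER_YEAR = 365 * 24  # 8,760 hours; 2022 was not a leap year


def average_power_watts(annual_energy_twh: float) -> float:
    """Average continuous power (W) implied by an annual total in terawatt-hours."""
    annual_energy_wh = annual_energy_twh * 1e12  # 1 TWh = 10^12 Wh
    return annual_energy_wh / HOURS_PER_YEAR


google_2022_twh = 22.29  # Statista figure quoted above
brain_watts = 20         # human-brain figure used later in this post

avg_watts = average_power_watts(google_2022_twh)
print(f"Average draw: {avg_watts / 1e9:.2f} GW")
print(f"Equivalent 20 W brains: {avg_watts / brain_watts / 1e6:.0f} million")
# Average draw: 2.54 GW
# Equivalent 20 W brains: 127 million
```

In other words, Google's 2022 consumption works out to a steady draw of roughly 2.5 gigawatts, every hour of the year.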

Carbon footprint and environmental impact of AI

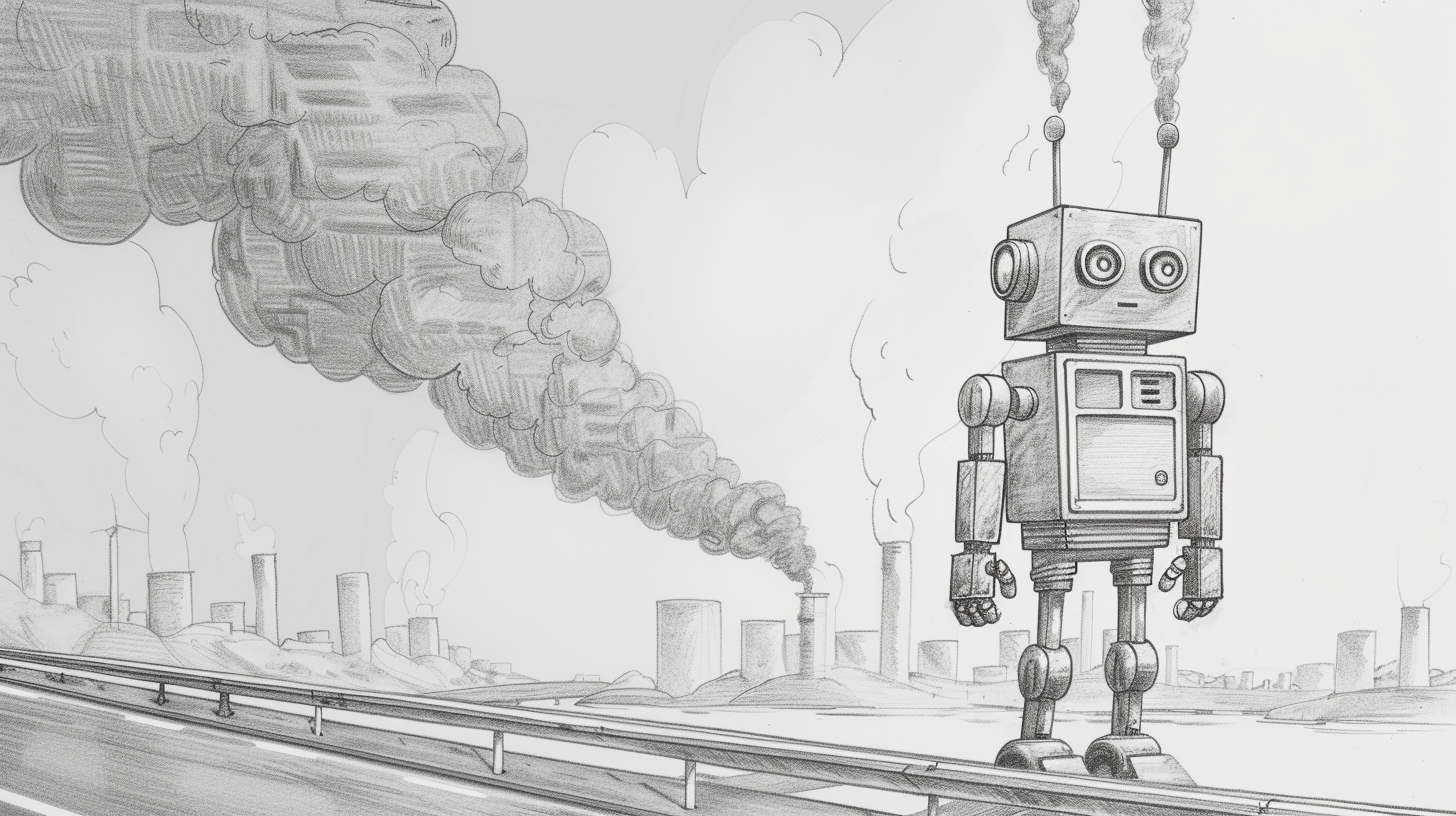

Artificial intelligence is not carbon neutral. We may have a new ally in the quest for green energy and lower carbon emissions, but this ally's computational genius largely depends on fossil fuels. According to research from the University of Massachusetts Amherst, training a single large AI model can emit as much carbon as five cars over their entire lifetimes.

The energy requirements for AI and services like Google Search are abstract: we aren't directly affected by the energy costs. When we ask ChatGPT a question, we don't have to drop in a quarter like at a payphone or smell the exhaust it creates. Yet it clearly does have a cost. Sam Altman, conversing with Elon Musk on Twitter, said as much:

“average is probably single-digits cents per chat; trying to figure out more precisely and also how we can optimize it”

The amount of energy, and therefore carbon emissions, depends on the hardware used to train the model and on where its electricity comes from. GPT-3 is estimated to have produced 500 metric tons of carbon dioxide, which is over 100 cars' worth of emissions. This is partly due to the underlying technology and servers used. By comparison, Hugging Face's LLM was trained on a French supercomputer drawing mainly on nuclear power and produced 25 metric tons of carbon dioxide.

Today, data centers produce more carbon emissions than the aviation industry, accounting for an estimated 2.5% to 3.7% of global greenhouse gas emissions. These numbers are troubling because this technology is so easy to use, and its cost so abstracted, that we don't give it a second thought. A single AI query is estimated to consume about 100 times more energy than a single Google search.

AI's "thinking" takes a lot of energy. Let's compare how much energy goes into a human thought versus an AI's.

How much energy goes into a human brain?

We cover this topic in depth on my podcast, but a big fear for many marketers and knowledge workers is that AI will replace or change their jobs. I think this points to an underlying insecurity many of us feel when working with LLMs and other AI tools. My own first interactions with ChatGPT felt a little like magic.

These tools feel hyper-intelligent. An AI's ability to code, research, write, and reason is incredible. It may even challenge the assumption that human intelligence is sacred and is what makes us unique in the natural world.

If intelligence doesn't make us unique, what does?

What if what makes us unique isn't just our capacity to think and reason, but how energy efficient our brains are? We have a parallel in our own history: persistence hunting. Some of our ancestors used a method of hunting that would make any Boston Marathoner proud: they'd literally run down prey over long distances until it collapsed from exhaustion.

The human brain makes up 2% of the body's mass yet consumes nearly 20% of our daily energy expenditure. Scientists have measured this and found the brain burns about 500 calories per day, which is about 20 calories per hour.
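The calories in this paragraph are food Calories (kilocalories), and converting them to watts is a handy sanity check on the 20-watt brain figure used later. This Python sketch assumes the brain burns its 500 kcal evenly over 24 hours.

```python
JOULES_PER_KCAL = 4184          # 1 food Calorie (kcal) = 4,184 joules
SECONDS_PER_DAY = 24 * 60 * 60  # 86,400 seconds


def kcal_per_day_to_watts(kcal_per_day: float) -> float:
    """Average power in watts for energy burned evenly over one day."""
    return kcal_per_day * JOULES_PER_KCAL / SECONDS_PER_DAY


brain_watts = kcal_per_day_to_watts(500)
print(f"Brain: about {brain_watts:.1f} W")
# Brain: about 24.2 W
```

That lands at roughly 24 watts, the same order of magnitude as the 20-watt figure.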

How much energy goes into an AI's brain?

AIs don't have brains like humans and don't use calories to power their thoughts. An AI draws its thinking energy from servers housed in data centers far from your office. A typical server draws between 500 and 1,200 watts. A high-end server may consume even more, and, as we've seen, AI requires more energy than tasks like Google searches.
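Watts measure how fast energy is used; watt-hours measure how much has been used. Multiplying power by time converts one into the other. A minimal Python sketch, assuming each server runs at a constant draw for a full day (real servers idle and peak):

```python
def energy_kwh(power_watts: float, hours: float) -> float:
    """Energy in kilowatt-hours for a device running at constant power."""
    return power_watts * hours / 1000  # watt-hours -> kilowatt-hours


# Low and high ends of the typical server range quoted above
for watts in (500, 1200):
    print(f"{watts} W: {energy_kwh(watts, 1):.1f} kWh per hour, "
          f"{energy_kwh(watts, 24):.1f} kWh per day")
# 500 W: 0.5 kWh per hour, 12.0 kWh per day
# 1200 W: 1.2 kWh per hour, 28.8 kWh per day
```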

What I find fascinating is that the servers themselves account for only part of the total energy required to run a data center. Cooling and thermal management are a major challenge for these facilities, and the AI boom is part of the reason the data center cooling industry is expected to grow at record levels over the next 5 years.
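Data center operators commonly express this overhead as Power Usage Effectiveness (PUE): total facility energy divided by the energy delivered to IT equipment, so a PUE of 1.0 would mean zero overhead. The Python sketch below applies it to one 1,200-watt server running for a day. The PUE of 1.5 is a hypothetical value chosen for illustration, not a measured figure for any AI data center.

```python
def facility_energy_kwh(it_energy_kwh: float, pue: float) -> float:
    """Total facility energy implied by an IT load and a PUE ratio.

    PUE = total facility energy / IT equipment energy, so it is never below 1.
    """
    if pue < 1:
        raise ValueError("PUE cannot be below 1")
    return it_energy_kwh * pue


server_kwh = 1200 * 24 / 1000  # one 1,200 W server for 24 hours = 28.8 kWh
assumed_pue = 1.5              # hypothetical, for illustration only

total_kwh = facility_energy_kwh(server_kwh, assumed_pue)
overhead_kwh = total_kwh - server_kwh  # cooling, power conversion, lighting, etc.
print(f"Server: {server_kwh:.1f} kWh, facility: {total_kwh:.1f} kWh, "
      f"overhead: {overhead_kwh:.1f} kWh")
# Server: 28.8 kWh, facility: 43.2 kWh, overhead: 14.4 kWh
```

Under that assumption, every kilowatt-hour the server uses costs the facility another half kilowatt-hour in overhead.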

For reference, the human brain runs on about 20 watts, enough to power a dim light bulb. A server, on the other hand, draws about as much power as a typical home. A detailed Stack Exchange thread estimates that each prompt could take about 0.3 kWh.
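Putting these figures side by side makes the gap concrete. This Python sketch divides the typical server range by the brain's 20 watts, then asks how long a brain could run on one prompt's worth of energy, taking the Stack Exchange estimate of 0.3 kWh per request at face value.

```python
BRAIN_WATTS = 20            # human brain, as quoted above
SERVER_WATTS = (500, 1200)  # typical server range quoted earlier
PROMPT_KWH = 0.3            # per-request estimate from the Stack Exchange thread

for watts in SERVER_WATTS:
    print(f"A {watts} W server draws {watts / BRAIN_WATTS:.0f}x the power of a brain")

# Hours a 20 W brain could run on the energy of a single prompt
brain_hours_per_prompt = PROMPT_KWH * 1000 / BRAIN_WATTS
print(f"One prompt = about {brain_hours_per_prompt:.0f} hours of brain time")
# A 500 W server draws 25x the power of a brain
# A 1200 W server draws 60x the power of a brain
# One prompt = about 15 hours of brain time
```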

Comparing Calories: AI vs. Human Thought

If this is all feeling abstract and over your head, that's ok. That's how I've felt as I've checked and rechecked my research and calculations.

The question that prompted this article was, "How much does an AI eat?"

To know this, we need an equivalent energy measure since humans can't be plugged into a wall and AI can't be fed breakfast.

That equivalent metric is joules. One kilowatt-hour (kWh) is exactly 3.6 million joules, and one calorie is 4.184 joules. So 1 kWh is equivalent to about 860,421 calories.
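The conversion is simple enough to check in a few lines of Python. The sketch uses the thermochemical calorie, defined as exactly 4.184 joules, which is the value that yields 860,421. That is the small (gram) calorie; nutrition labels count kilocalories, each 1,000 of these, so the last line prints the figure in both units.

```python
JOULES_PER_KWH = 1000 * 3600  # 1 kW sustained for 3,600 seconds = 3.6 million J
JOULES_PER_CALORIE = 4.184    # thermochemical (small) calorie


def kwh_to_calories(kwh: float) -> float:
    """Convert kilowatt-hours to small (gram) calories."""
    return kwh * JOULES_PER_KWH / JOULES_PER_CALORIE


cal = kwh_to_calories(1)
print(f"1 kWh = {cal:,.0f} calories = {cal / 1000:,.1f} kilocalories")
# 1 kWh = 860,421 calories = 860.4 kilocalories
```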

That's a lot of food.

And that's for just one hour of operation at 1,000 watts. You'd need roughly 20.7 million calories a day to feed an AI.

To put one hour of that in perspective, it's about 122,917 almonds, weighing about 143 kg (317 lb). Just imagining chewing all that food makes my jaw ache.

By comparison, about three almonds would cover your brain's caloric needs for an hour.

If almonds aren't your thing, let's use white rice. 860,421 calories is about 650 lb (294 kg) of white rice. According to consumer price index data from the US Bureau of Labor Statistics, the average price of a pound of white rice is $0.99 USD. Round up to a dollar and that's about $650 worth of white rice every hour.

This is a lot of money to spend on groceries for an ultra-intelligent robot.

The Efficiency of the Human Mind

I think there's something missing in the conversation about artificial and human intelligence: the energy efficiency of the human mind. The brain is elegantly designed. Its ability to reason, emote, and do all the things that AIs can do is remarkable.

Watt-for-watt, joule-for-joule, calorie-for-calorie: the human brain is a feat of nature.

Let's not sell ourselves short when comparing intelligences.